Compact AI Acceleration: Geniatech’s M.2 Module for Scalable Deep Learning



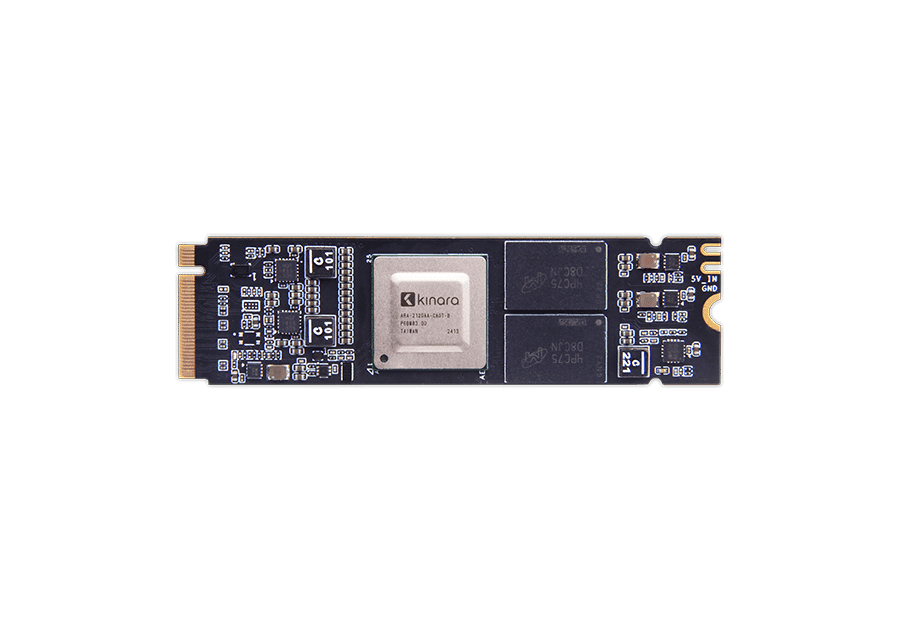

Geniatech M.2 AI Accelerator Module: Compact Power for Real-Time Edge AI

Artificial intelligence (AI) continues to revolutionize how industries operate, particularly at the edge, where rapid processing and real-time insights are not just desirable but critical. The AI M.2 module has emerged as a compact yet powerful solution for meeting the requirements of edge AI applications. Offering strong performance within a small footprint, this module is rapidly driving innovation in everything from smart cities to industrial automation.

The Need for Real-Time Processing at the Edge

Edge AI bridges the gap between people, devices, and the cloud by enabling real-time data processing where it is most needed. Whether powering autonomous vehicles, smart security cameras, or IoT sensors, decision-making at the edge must occur in microseconds. Traditional computing systems have struggled to keep up with these demands.

Enter the M.2 AI Accelerator Module. By integrating high-performance machine learning capabilities into a compact form factor, this technology is reshaping what real-time processing looks like. It delivers the speed and efficiency businesses require without relying entirely on cloud infrastructure, which can introduce latency and raise costs.

What Makes the M.2 AI Accelerator Module Stand Out?

• Compact Design

One of the standout features of this AI accelerator module is its compact M.2 form factor. It fits easily into a variety of embedded systems, servers, or edge devices without the need for extensive hardware modifications. That makes deployment simpler and far more space-efficient than bulkier alternatives.

• High Throughput for Machine Learning Tasks

Designed with advanced neural network processing capabilities, the module delivers impressive throughput for tasks like image recognition, video analysis, and speech processing. The architecture ensures smooth handling of complex ML models in real time.

• Energy Efficient

Energy consumption is a major concern for edge devices, particularly those that run in remote or power-sensitive environments. The module is optimized for performance per watt while sustaining consistent and reliable workloads, making it well suited to battery-operated or low-power systems.

• Versatile Applications

From healthcare and logistics to smart retail and manufacturing automation, the M.2 AI Accelerator Module is redefining possibilities across industries. For instance, it powers advanced video analytics for intelligent surveillance and supports predictive maintenance by analyzing sensor data in industrial settings.

Why Edge AI is Gaining Momentum

The rise of edge AI is driven by growing data volumes and an increasing number of connected devices. According to recent industry figures, there are around 14 billion IoT devices operating globally, a number projected to exceed 25 billion by 2030. With this shift, traditional cloud-dependent AI architectures face bottlenecks such as increased latency and privacy concerns.
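To put the two device counts quoted above in perspective, the short Python sketch below works out the annual growth rate they imply. The article does not say which year the current figure refers to, so `ASSUMED_BASE_YEAR` is purely an assumption for illustration, and the function name is hypothetical.

```python
def implied_annual_growth(start, end, years):
    """Return the compound annual growth rate that takes `start` to `end`."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


# Device counts as quoted in the text; only their ratio matters here.
devices_now = 14.0
devices_2030 = 25.0

# Assumption: the article gives no base year for the current figure.
ASSUMED_BASE_YEAR = 2023
years = 2030 - ASSUMED_BASE_YEAR

rate = implied_annual_growth(devices_now, devices_2030, years)
print(f"Implied growth: {rate:.1%} per year over {years} years")
```

Under that assumed base year the implied rate is a little under 9% per year; a later base year would give a higher rate.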

Edge AI mitigates these challenges by processing data locally, delivering near-instantaneous insights while safeguarding user privacy. The M.2 AI Accelerator Module aligns well with this trend, enabling organizations to harness the full potential of edge intelligence without compromising on operational efficiency.

Key Statistics Showing the Impact

To understand the impact of such technologies, consider these highlights from recent industry studies:

• Growth in Edge AI Market: The global edge AI hardware market is projected to grow at a compound annual growth rate (CAGR) exceeding 20% through 2028. Products like the M.2 AI Accelerator Module are essential for driving that growth.

• Performance Benchmarks: Labs testing AI accelerator modules in real-world scenarios have shown up to a 40% improvement in real-time inferencing workloads compared to conventional edge processors.

• Adoption Across Industries: About 50% of enterprises deploying IoT devices are expected to integrate edge AI solutions by 2025 to improve operational efficiency.
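As a rough illustration of what a 20% compound annual growth rate means in practice, the Python sketch below compounds that rate over several years and estimates how long the market would take to double. The five-year horizon and the function names are assumptions chosen for the example, not figures from the studies.

```python
import math


def growth_multiplier(cagr, years):
    """Factor by which a quantity grows after `years` at a constant `cagr`."""
    return (1.0 + cagr) ** years


def doubling_time(cagr):
    """Years needed to double at a constant compound annual growth rate."""
    if cagr <= 0:
        raise ValueError("cagr must be positive")
    return math.log(2.0) / math.log(1.0 + cagr)


CAGR = 0.20          # lower bound quoted in the text
HORIZON_YEARS = 5    # assumption: illustrative horizon only

multiplier = growth_multiplier(CAGR, HORIZON_YEARS)
print(f"Size after {HORIZON_YEARS} years: {multiplier:.2f}x the starting size")
print(f"Doubling time: {doubling_time(CAGR):.1f} years")
```

At exactly 20% a year, a market roughly two and a half times its starting size after five years is the floor implied by a CAGR "exceeding 20%".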

With such numbers underscoring its relevance, the M.2 AI Accelerator Module appears to be not just a tool but a game-changer in the shift to smarter, faster, and more scalable edge AI solutions.

Pioneering AI at the Edge

The M.2 AI Accelerator Module represents more than just another piece of hardware; it is an enabler of next-gen innovation. Organizations adopting this technology can stay ahead of the curve in deploying agile, real-time AI solutions fully optimized for edge environments. Compact yet powerful, it is a fitting encapsulation of progress in the AI revolution.

From its ability to process machine learning models on the fly to its flexibility and power efficiency, this module is proving that edge AI is not a distant dream. It's happening now, and with tools like this, it's easier than ever to bring smarter, faster AI closer to where the action happens.